Unsupervised Thoughts A blog on machine learning

Better Steering LLM Agents with LMQL

One of the most fascinating aspects of autoregressive large language models (LLMs) like GPT-3 is their ability to act through external tools. In this post, I’ll illustrate how LMQL (Beurer-Kellner et al., 2022), a new programming language for language model interaction, helps better steer such LLM agents. I’ll take ReAct (Yao et al., 2022) as an example and show how to enforce constraints on the task-solving trajectory, the choice of tools and the tools’ inputs.

- Tool-augmented LLMs and ReAct

- Enforcing the ReAct Structure with LMQL

- Restricting the Choice of Tools

- Constraining the Tools’ Inputs

- Defining more Robust Action Plans

- Cost Considerations

- Conclusion

- References

Tool-augmented LLMs and ReAct

Taken in isolation, LLMs know at most what they have been shown during their training and can only generate text. However, external tools can give them access to external knowledge sources and enable them to act in the real world. Such tools can be a web search engine, a calculator, a Python interpreter, an email client…

Following the ReAct paper, we’ll imagine in the following example that we try to answer questions thanks to two tools:

search(entity)returns the first sentences of the Wikipedia page ofentity(or suggests the most similar entities ifentitydoesn’t have a Wikipedia page);lookup(string)returns the next sentence containingstringin the last page accessed with thesearchtool.

Let’s now see how the ReAct prompting method works:

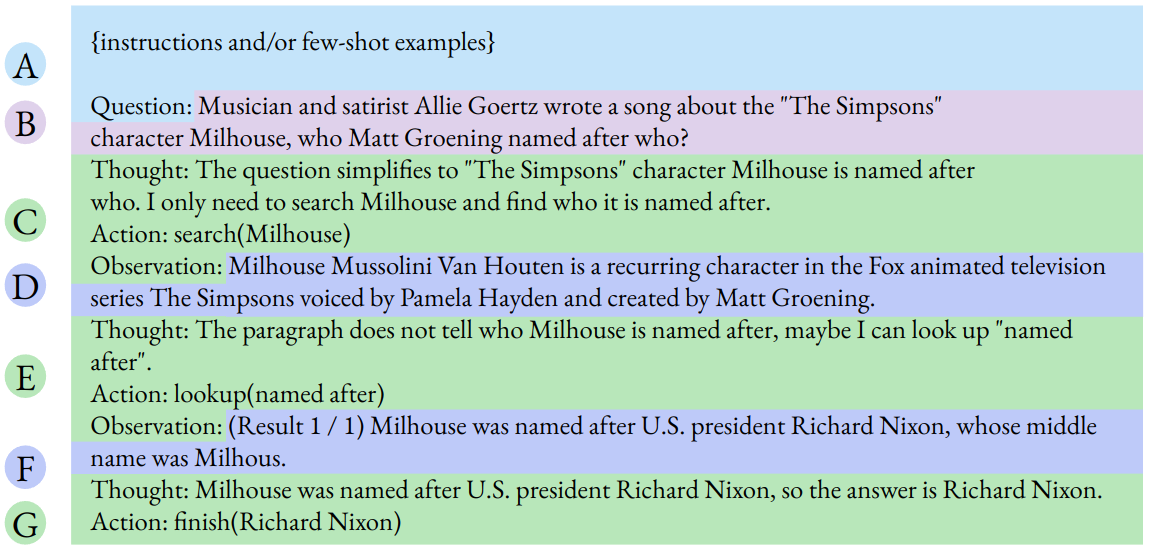

- We initiate a prompt by showing the expected format of a task-solving trajectory through few-shot examples or explicit instructions A . This task-solving trajectory is composed of thoughts (paragraphs starting with “Thoughts: “), actions (“Action: “ followed by the name of the tool to use and its input or “Action: Finish” followed by the final answer in parentheses) and, just each non-final action, observations (“Observation: “ followed by the output of the preceding action);

- We include the question in the prompt B ;

- We let the LLM generate the continuation of the prompt until we detect either the “Observation:” substring C E or the “Action: Finish” substring G ;

- If the substring detected at Step 3 is “Observation:”, we parse the generated text and extract the preceding action and its input. We then use the corresponding tool to generate the output, append it D F to the preceding text and go back to Step 3.

- If the substring detected at Step 3 is “Action: finish”, we extract and return the final answer G .

Although very simple and easy to implement, ReAct yields good results with large enough language models. However, this method relies on the LLM rigorously following the prescribed format. In practice, for example with Langchain’s implementation of ReAct, the LLM may deviate from it and even hallucinate imaginary tools, which prevents its output from being parsed. And this is where LMQL comes into play…

Enforcing the ReAct Structure with LMQL

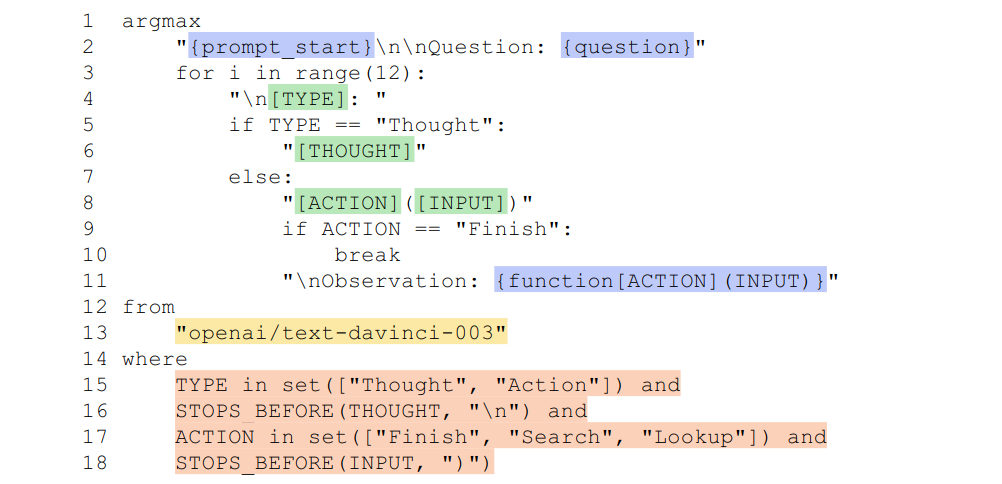

LMQL is a declarative query language for LLMs. With LMQL queries, developers can concisely and intuitively specify the constraints applicable to a sequence of text generation steps. For example, the LMQL query below implements the ReAct method:

I invite you to learn about the LMQL syntax through the LMQL documentation and examples. In a nutshell:

argmaxdenotes a greedy decoding strategy for the text generation;- The block between

argmaxandfromspecifies the structure of the text to create. It includes some Python code responsible for the control flow and top-level strings that are progressively added to the text as they appear; - The strings can include bracketed variables, e.g.

[X]. At this location, the LLM will generate some text that will be stored in a variable namedX; - The strings can also include Python statements in curly brackets. These statements are replaced by their values when the corresponding string is added to the text;

- The

fromclause specifies which model to call; - The

whereclause defines constraints on the variables fed by the LLM. For example, theSTOPS_BEFORE(X, c)constraint interrupts the text generation for variableXwhen charactercis generated.cis discarded so thatXdoes not end withc.

Assuming that you have previously defined the Python strings named prompt_start and questions, as well as a Python dictionary mapping the strings “Search” and “Lookup” to the corresponding functions, this LMQL query is all you need to run ReAct.

It is concise and easy to understand. Furthermore, as opposed to the LangChain ReAct implementation, it guarantees that the output text follows the expected format. For example, the LLM cannot invent new actions.

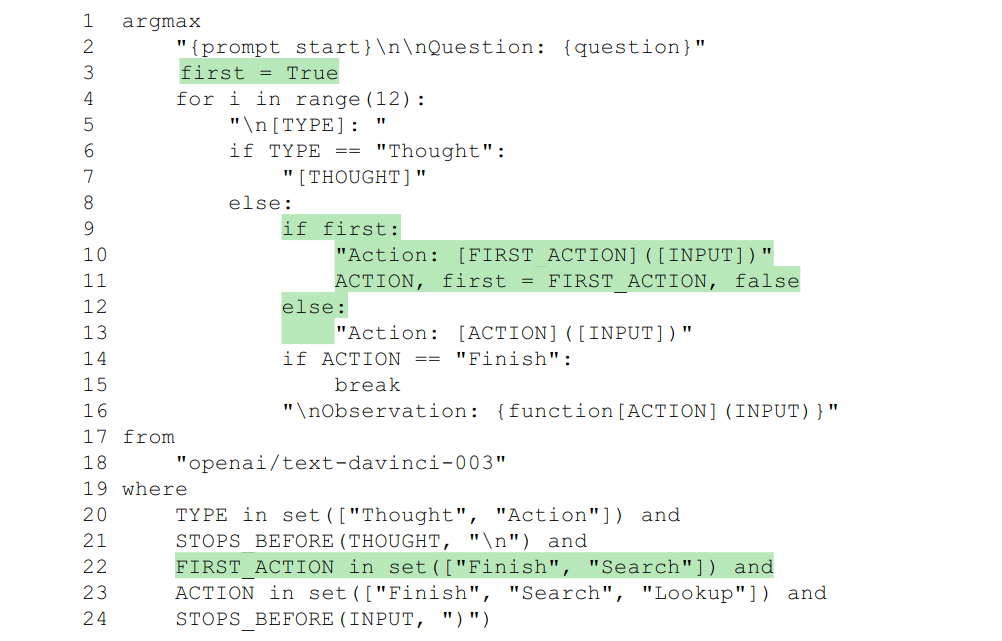

Restricting the Choice of Tools

The tools of the ReAct agent may not be relevant or available at all times. For example:

- Some tools may consume some resources and we may want to prevent their use if our resources are below a certain level;

- Asking the user a question can also be a “tool”. It would be reasonable to stop raising such questions if the user declined to answer a previous question or did not answer within a certain frame;

- In the previous section, the

lookuptool provides valid results only if thesearchtool has been used at least once.

In all these cases, such restrictions can be specified with LMQL. For instance, the LMQL query below corresponds to the same ReAct agent as before but with the lookup tool not available for the first action (changes in green).

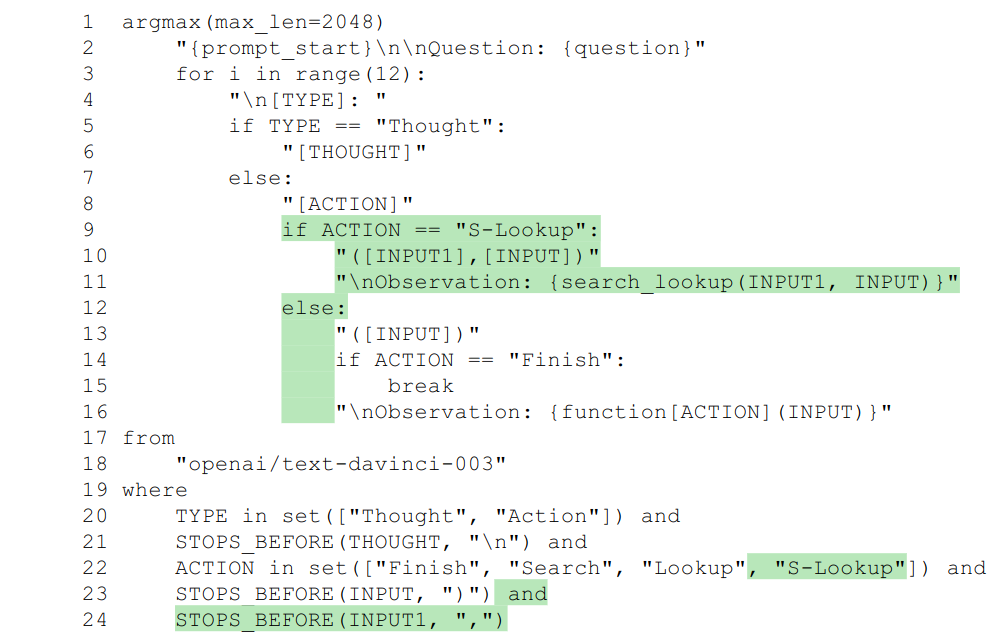

Constraining the Tools’ Inputs

A tool in Langchain is implemented through a string-to-string function that computes the “observation” provided to the LLM. However, in many cases, the input should not be an arbitrary string. This string may be expected to take only one of a few predetermined values or to be formatted in a very specific way, for example following a JSON schema.

Such constraints, which may not be satisfied in Langchain, can be enforced with LMQL. For this, we can simply condition on the value of the ACTION variable, specify the structure of the input string and add the right constraints on the corresponding variables.

In the example below (changes in green), we imagine that we have a new tool search-lookup that combines search and lookup in one function and takes two arguments (the entity of the Wikipedia page and the word to look up). In this case, we of course need to adjust the instructions or the few-shot examples so that the LLM considers the use of the new tool.

Defining more Robust Action Plans

The last example covered in this blog post concerns the OpenAPI hierarchical planning agent implemented in Langchain.

This agent is designed to accomplish high-level tasks with multiple API calls in the context of many potential API endpoints. It combines a planner responsible for listing the endpoints to call in the right order and a controller responsible for calling these endpoints.

In the example in the Langchain documentation, the user query is “make me a playlist with the first song from kind of blue. call it machine blues” and the planner is provided with the Spotify OpenAPI specification.

In this situation, a planner based on gpt-4 devised the correct plan below while a planner based on text-davinci-003 includes invalid endpoints:

1. GET /search to search for the album "Kind of Blue"

2. GET /albums/{id}/tracks to get the tracks from the "Kind of Blue" album

3. GET /me to get the current user's information

4. POST /users/{user_id}/playlists to create a new playlist named "Machine Blues" for the current user

5. POST /playlists/{playlist_id}/tracks to add the first song from "Kind of Blue" to the "Machine Blues" playlist

Here it is of course simple to create a planner with LMQL that only references valid endpoints and follows the expected format (in this case, this is however not enough to build a successful plan…), as demonstrated in this notebook.

Cost Considerations

Constraining the text generation creates a computational overhead. This is negligible with locally-hosted models and simple constraints as those used in this blog post.

This is unfortunately not true when calling the OpenAI API. All things being equal, enforcing constraints leads to additional calls and all the tokens, even those previously fed to or generated by the API, are billed. This increases the API costs, as shown in this notebook.

Of course, guaranteeing that the constraints are satisfied may reduce costs. For example, it can help achieve comparable performance with shorter prompts or less expensive models. It can also prevent generating texts that are unparseable and thus useless. Finally, the LMQL team is currently working on a cache layer that will decrease the number of requests.

Conclusion

LMQL is a promising tool to easily develop more predictable LLM agents and potentially make them safer and more beneficial. However, with the way the OpenAI API is currently designed and billed, this increased robustness may come with higher inference costs. Hopefully, the LLM providers will adapt their offerings and new powerful open source models will be made available so that users can take full advantage of tools like LMQL.

Many thanks to Luca Beurer-Kellner for his feedback and more generally to him and his colleagues for building LMQL.

References

- Beurer-Kellner, L., Fischer, M., & Vechev, M. (2022). Prompting Is Programming: A Query Language For Large Language Models. PLDI ’23.

- Yao, S., Zhao, J., Yu, D., Du, N., Shafran, I., Narasimhan, K., & Cao, Y. (2022). ReAct: Synergizing Reasoning and Acting in Language Models. ArXiv Preprint ArXiv:2210.03629.